Hi @rod ,

Thank you for the reply, I think I found why it doesn’t show nvlink here is the steps to produce: ( changed the size from the example N only )

First generate this file inside of a folder p2p_copy.cpp:

// REQUIRES: cuda || hip || level_zero

// RUN: %{build} -o %t.out

// RUN: %{run} %t.out

#include <cassert>

#include <numeric>

#include <sycl/sycl.hpp>

#include <vector>

using namespace sycl;

// Array size to copy

constexpr int N = 1000000;

int main() {

auto Devs = sycl::device::get_devices(info::device_type::gpu);

if (Devs.size() < 2) {

std::cout << "Cannot test P2P capabilities, at least two devices are "

"required, exiting."

<< std::endl;

return 0;

}

std::vector<sycl::queue> Queues;

std::transform(Devs.begin(), Devs.end(), std::back_inserter(Queues),

[](const sycl::device &D) { return sycl::queue{D}; });

////////////////////////////////////////////////////////////////////////

if (!Devs[0].ext_oneapi_can_access_peer(

Devs[1], sycl::ext::oneapi::peer_access::access_supported)) {

std::cout << "P2P access is not supported by devices, exiting."

<< std::endl;

return 0;

}

// Enables Devs[0] to access Devs[1] memory.

Devs[0].ext_oneapi_enable_peer_access(Devs[1]);

std::vector<int> input(N);

std::iota(input.begin(), input.end(), 0);

int *arr0 = malloc<int>(N, Queues[0], usm::alloc::device);

Queues[0].memcpy(arr0, &input[0], N * sizeof(int));

int *arr1 = malloc<int>(N, Queues[1], usm::alloc::device);

// P2P copy performed here:

Queues[1].copy(arr0, arr1, N).wait();

int out[N];

Queues[1].copy(arr1, out, N).wait();

sycl::free(arr0, Queues[0]);

sycl::free(arr1, Queues[1]);

bool ok = true;

for (int i = 0; i < N; i++) {

if (out[i] != input[i]) {

printf("%d %d\n", out[i], input[i]);

ok = false;

break;

}

}

printf("%s\n", ok ? "PASS" : "FAIL");

return 0;

}

Then compiling it with ( Intel(R) oneAPI DPC++/C++ Compiler 2024.0.0 (2024.0.0.20231017) ):

icpx -fsycl -fsycl-targets=nvptx64-nvidia-cuda p2p_copy.cpp

nsys profile --stats=true ./a.out

Prints out :

SYCL_PI_TRACE[basic]: Plugin found and successfully loaded: libpi_opencl.so [ PluginVersion: 14.37.1 ]

SYCL_PI_TRACE[basic]: Plugin found and successfully loaded: libpi_cuda.so [ PluginVersion: 14.38.1 ]

SYCL_PI_TRACE[basic]: Plugin found and successfully loaded: libpi_unified_runtime.so [ PluginVersion: 14.37.1 ]

PASS

Generating '/scratch/hpc-prf-agraph/agraph01_tmp/nsys-report-ea80.qdstrm'

[1/8] [========================100%] report3.nsys-rep

[2/8] [========================100%] report3.sqlite

[3/8] Executing 'nvtx_sum' stats report

SKIPPED: /scratch/hpc-prf-agraph/p2p-test/report3.sqlite does not contain NV Tools Extension (NVTX) data.

[4/8] Executing 'osrt_sum' stats report

Time (%) Total Time (ns) Num Calls Avg (ns) Med (ns) Min (ns) Max (ns) StdDev (ns) Name

-------- --------------- --------- ------------ ------------ --------- ----------- ------------ ----------------------

56.2 652,722,511 31 21,055,564.9 15,388,416.0 1,580 100,158,554 29,951,403.1 poll

40.6 472,348,335 1,424 331,705.3 14,200.0 1,010 27,697,419 1,302,138.7 ioctl

2.0 22,669,132 86 263,594.6 3,205.0 1,290 11,163,582 1,687,697.1 fopen

0.6 6,744,637 1 6,744,637.0 6,744,637.0 6,744,637 6,744,637 0.0 dup

0.2 2,642,132 80 33,026.7 7,385.0 6,140 814,131 118,344.2 mmap64

0.1 1,431,074 24 59,628.1 45,570.0 23,440 370,980 66,964.6 sem_timedwait

0.1 1,165,702 5 233,140.4 210,021.0 143,181 319,610 69,354.3 pthread_create

0.1 715,443 27 26,497.9 4,380.0 1,200 163,720 43,134.2 fopen64

0.0 492,904 130 3,791.6 3,175.0 1,111 13,870 1,622.8 open64

0.0 481,431 2 240,715.5 240,715.5 196,340 285,091 62,756.4 connect

0.0 207,480 28 7,410.0 7,425.0 1,000 17,120 6,142.7 read

0.0 201,413 30 6,713.8 4,275.5 1,240 29,940 6,857.1 mmap

0.0 123,700 53 2,334.0 1,420.0 1,009 16,150 2,955.9 fclose

0.0 94,470 1 94,470.0 94,470.0 94,470 94,470 0.0 pthread_cond_wait

0.0 69,119 2 34,559.5 34,559.5 34,409 34,710 212.8 fgets

0.0 49,100 10 4,910.0 4,585.0 1,900 11,920 2,836.7 open

0.0 36,367 23 1,581.2 1,420.0 1,000 3,410 547.1 write

0.0 32,000 10 3,200.0 3,190.0 1,800 5,790 1,242.1 munmap

0.0 26,241 4 6,560.3 6,520.0 3,891 9,310 2,735.6 fread

0.0 20,320 3 6,773.3 4,740.0 3,760 11,820 4,397.9 socket

0.0 15,780 3 5,260.0 6,300.0 1,730 7,750 3,141.9 pipe2

0.0 10,241 3 3,413.7 3,071.0 1,780 5,390 1,829.2 fwrite

0.0 5,080 2 2,540.0 2,540.0 1,220 3,860 1,866.8 pthread_cond_broadcast

0.0 1,370 1 1,370.0 1,370.0 1,370 1,370 0.0 bind

0.0 1,040 1 1,040.0 1,040.0 1,040 1,040 0.0 fcntl

[5/8] Executing 'cuda_api_sum' stats report

Time (%) Total Time (ns) Num Calls Avg (ns) Med (ns) Min (ns) Max (ns) StdDev (ns) Name

-------- --------------- --------- --------- --------- -------- -------- ----------- -------------------

55.5 1,147,711 3 382,570.3 234,850.0 14,060 898,801 460,497.2 cuMemcpyAsync

25.6 528,571 2 264,285.5 264,285.5 113,060 415,511 213,865.2 cuMemAlloc_v2

10.9 225,320 2 112,660.0 112,660.0 110,350 114,970 3,266.8 cuMemFree_v2

4.8 98,800 2 49,400.0 49,400.0 2,390 96,410 66,482.2 cuEventSynchronize

0.8 17,410 3 5,803.3 4,250.0 2,450 10,710 4,343.6 cuStreamCreate

0.8 15,950 3 5,316.7 4,300.0 3,560 8,090 2,430.1 cuStreamDestroy_v2

0.6 11,780 5 2,356.0 1,550.0 940 4,100 1,584.7 cuEventRecord

0.4 8,441 3 2,813.7 2,700.0 1,541 4,200 1,333.1 cuStreamSynchronize

0.3 6,309 14 450.6 235.0 140 2,480 617.4 cuCtxSetCurrent

0.2 4,480 5 896.0 930.0 370 1,330 432.8 cuEventCreate

0.1 1,450 3 483.3 430.0 350 670 166.5 cuEventDestroy_v2

[6/8] Executing 'cuda_gpu_kern_sum' stats report

SKIPPED: /scratch/hpc-prf-agraph/p2p-test/report3.sqlite does not contain CUDA kernel data.

[7/8] Executing 'cuda_gpu_mem_time_sum' stats report

Time (%) Total Time (ns) Count Avg (ns) Med (ns) Min (ns) Max (ns) StdDev (ns) Operation

-------- --------------- ----- --------- --------- -------- -------- ----------- ----------------------------

67.6 609,984 1 609,984.0 609,984.0 609,984 609,984 0.0 [CUDA memcpy Device-to-Host]

21.9 197,535 1 197,535.0 197,535.0 197,535 197,535 0.0 [CUDA memcpy Host-to-Device]

10.5 94,815 1 94,815.0 94,815.0 94,815 94,815 0.0 [CUDA memcpy Peer-to-Peer]

[8/8] Executing 'cuda_gpu_mem_size_sum' stats report

Total (MB) Count Avg (MB) Med (MB) Min (MB) Max (MB) StdDev (MB) Operation

---------- ----- -------- -------- -------- -------- ----------- ----------------------------

4.000 1 4.000 4.000 4.000 4.000 0.000 [CUDA memcpy Device-to-Host]

4.000 1 4.000 4.000 4.000 4.000 0.000 [CUDA memcpy Host-to-Device]

4.000 1 4.000 4.000 4.000 4.000 0.000 [CUDA memcpy Peer-to-Peer]

Generated:

/scratch/hpc-prf-agraph/p2p-test/report3.nsys-rep

/scratch/hpc-prf-agraph/p2p-test/report3.sqlite

So I can see that 4 MB of data is transferred from Host to Device, then P2P , then Device to Host All match with size in the last table so should be OK right. ?

Things get a bit complicated when I generate the report with this :

nsys profile --stats=true --gpu-metrics-frequency=200000 --gpu-metrics-device=0 ./a.out

I used 200 kHz for frequency to capture execution times more stable since it varies a lot when captured with default settings.

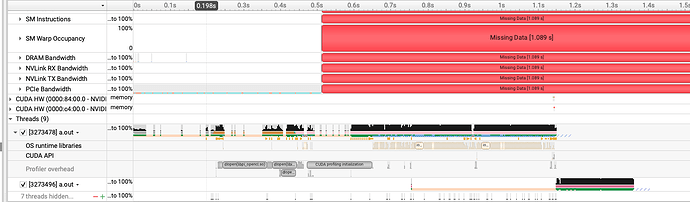

The generated nsys file does not have any activity with NVlink :